Most of us begin working on projects, websites, or applications that are already version controlled in one way or another. If you encounter one that isn’t, it’s fairly easy to initialize a Git repository and start tracking from exactly where you are. Recently, however, I ran into an application that was only halfway version controlled. By that I mean the actual application code was version controlled, but it was deployed from Ansible code hosted on a server that was NOT version controlled. This made the deploy process frustrating for a number of reasons.

- If your deploy fails, is it the application code or the Ansible code? If the latter, is it because something changed? If so, what? It’s nearly impossible to tell without version control.

- Not only did this application use Ansible to deploy, it also used Capistrano within the Ansible roles.

- While the application itself had its own AMI that could be replicated across blue-green deployments in AWS, the source server performing the deploy did not, so a server outage could mean a devastating loss.

- Much of the Ansible (and Capistrano) code had not been touched or updated in roughly 4 years.

- To top it off, this app is a Ruby on Rails application, and Ruby was installed with rbenv instead of rvm, allowing multiple versions of Ruby to be installed.

- It’s on a separate AWS account from everything else, adding the fun mystery of figuring out which services it’s actually using, and which are just there because someone tried something and gave up.

As you might imagine, after two separate incidents of late nights trying to follow the demented rabbit trail of deployment issues in this app, I’d had enough. I was literally Lucille Bluth yelling at this disaster of an app.

Do you ever just get this uncontrollable urge to take vengeance for the time you’ve lost just sorting through an unrelenting swamp of misery caused by NO ONE VERSION-CONTROLLING THIS THING FROM THE BEGINNING? Well, I did. So, below, read how I sorted this thing out.

Start with the basics

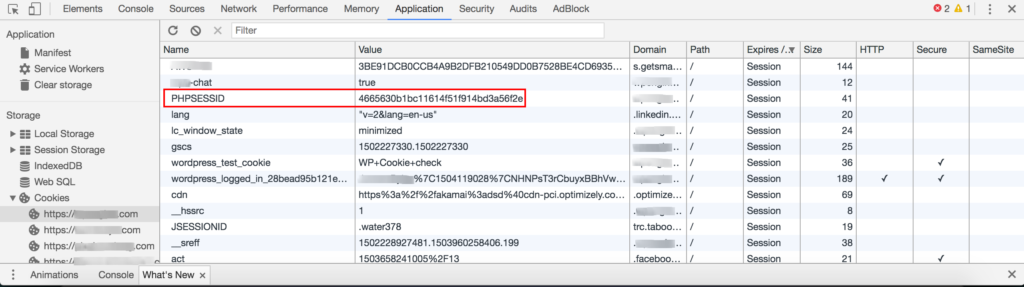

First of all, we created a repository for the Ansible/deployment code and committed the existing code from this server to it. Well, kind of. It turns out there were some keys and other secrets that shouldn’t be checked into a Git repo willy-nilly, so we had to do some strategic editing.
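For the "strategic editing" step, a rough scan for plaintext secrets before the first commit can save some embarrassment. The following is only a minimal sketch, not what we actually ran: the pattern list and the `find_plaintext_secrets` helper are hypothetical, and the regexes will produce both false positives and misses, so treat the output as a list of lines to eyeball.

```ruby
require "find"

# Hypothetical patterns for illustration only; tune them to your own code base.
SECRET_PATTERNS = {
  "private key"    => /-----BEGIN (?:RSA |EC |DSA |OPENSSH )?PRIVATE KEY-----/,
  "AWS access key" => /\bAKIA[0-9A-Z]{16}\b/,
  "password-like"  => /(?:password|passwd|secret|api_key|token)\w*\s*[:=]\s*\S+/i
}.freeze

# Walk `root` and return [path, line_number, label] for every suspicious line.
def find_plaintext_secrets(root)
  hits = []
  Find.find(root) do |path|
    # Skip git internals entirely.
    Find.prune if File.directory?(path) && File.basename(path) == ".git"
    next unless File.file?(path)

    File.foreach(path).with_index(1) do |line, number|
      line = line.scrub # tolerate binary or oddly encoded files
      SECRET_PATTERNS.each do |label, pattern|
        hits << [path, number, label] if line.match?(pattern)
      end
    end
  end
  hits
end
```

Calling `find_plaintext_secrets("group_vars")` returns one entry per flagged line, which you can print and review before anything gets staged.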

Then I did some mental white-boarding, planning out how to go about this metamorphosis. I knew the new version of this app’s deployment code would need a few things:

- Version control (obviously)

- Filter out which secure items were actually needed (there were definitely some superfluous ones), and encrypt them using ansible-vault.

- Eliminate the need for a bastion/deployment server altogether — AWS CodeDeploy, Bitbucket Pipelines, or other deployment tools can accomplish blue-green deployments without needing an entirely separate server for it.

- Upgrade CentOS from 6.5 to 7

- Filter out unnecessary workarounds hacked into Ansible over the years (ANSIBLE WHAT DID THEY DO TO YOU!? :sob:)

- Fix the janky way Passenger was installed and switch its base from httpd/Apache to Nginx

- A Vagrant/local version of this app. I honestly don’t know how they developed this app without one the whole time, but here we are.
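For the ansible-vault item in the list above, one cheap safeguard is to confirm that every file meant to be vaulted really is encrypted before it gets committed. Files encrypted by ansible-vault begin with a `$ANSIBLE_VAULT;` header line, so a check can be as small as the sketch below. The `unencrypted_vault_files` helper and the idea of keeping vaulted files in a single directory are assumptions for illustration.

```ruby
# First bytes of any file encrypted with ansible-vault.
VAULT_HEADER = '$ANSIBLE_VAULT;'.freeze

# Return the files under `dir` whose first line is NOT an ansible-vault header.
def unencrypted_vault_files(dir)
  Dir.glob(File.join(dir, "**", "*"))
     .select { |path| File.file?(path) }
     .reject { |path| File.open(path, &:gets).to_s.start_with?(VAULT_HEADER) }
     .sort
end
```

An empty result means everything in the directory carries the vault header. Anything listed is either plaintext or empty and deserves a look.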

So clearly I had my work cut out for me. But if you know me, you also know I will stop at nothing to fix a thing that has done me wrong enough times. I dove in.

Creating a Vagrant environment

Since I knew what operating system and version I was going to build, I started with my basic Ansible + Vagrant template. I had it pull the regular “centos/7” box as our starting point. To start, I was given a layout like this to work with:

- app_dev/
  - deploy_script.sh
  - deploy_script_old.sh
  - bak_deploy_script_old_KEEP.sh
  - playbook.yml
  - playbook2.yml
  - playbook3.yml
  - adhoc_deploy_script.sh
  - group_vars/
    - localhost
    - localhost_bak
    - localhost_old
    - localhost_template
  - roles/
    - role1/
      - tasks/
        - main.yml
      - templates/
        - application.yml
        - database.yml
    - role2/
      - tasks/
        - main.yml
      - templates/
        - application.yml
        - database.yml
    - role3/
      - tasks/
        - main.yml
      - templates/
        - application.yml
        - database.yml

There were several versions of old vars files and scripts left over from the years of non-version-control, and inside the group_vars folder there were sensitive keys that should not be checked into the Git repo in plain text. Additionally, the “templates” seemed to exist in different forms in every role, even though only one role used them.

I re-arranged the structure and filtered out some old versions of things to start:

- app_dev/
  - README.md
  - Vagrantfile
  - provisioning/
    - web_playbook.yml
    - database_playbook.yml
    - host.vagrant
    - group_vars/
      - local/
        - local
      - develop/
        - local
      - staging/
        - staging
      - production/
        - production
    - vaulted_vars/
      - local
      - develop
      - staging
      - production
    - roles/
      - role1/
        - tasks/
          - main.yml
        - templates/
          - application.yml
          - database.yml
      - role2/
        - tasks/
          - main.yml
      - role3/
        - tasks/
          - main.yml
    - scripts/
      - deploy_script.sh
      - vagrant_deploy.sh

Inside the playbooks I laid out the roles in the order they seemed to be run from deploy_script.sh, so Ansible could use them in the Vagrant build process. From there, it was a lot of running vagrant up, finding out where it failed this time, and finding a better way to run the tasks (if they were even needed, as often they were not).
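To make the vagrant up loop concrete, here is roughly what a Vagrantfile wiring the box to the playbook can look like. This is a sketch under assumptions rather than the actual file: the box name, IP address, and file names come from this post, while the single-machine setup and the `ansible.limit` setting are guesses.

```ruby
# Vagrantfile (sketch). Paths are relative to the directory holding this file.
Vagrant.configure("2") do |config|
  # The stock CentOS 7 box used as the starting point.
  config.vm.box = "centos/7"

  # Fixed private IP so the Capistrano stage file can point at this machine.
  config.vm.network "private_network", ip: "192.168.67.4"

  # Run the web playbook with Vagrant's Ansible provisioner on every provision.
  config.vm.provision "ansible" do |ansible|
    ansible.playbook = "provisioning/web_playbook.yml"
    ansible.inventory_path = "provisioning/host.vagrant"
    # Assumption: with a hand-written inventory, target every host it lists.
    ansible.limit = "all"
  end
end
```

With something like this in place, vagrant up builds and provisions the box, and vagrant provision re-runs only the Ansible step after each fix.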

Perhaps the hardest part was figuring out the Capistrano part of the deploy process. If you’re not familiar, Capistrano is a deployment tool written in Ruby that lets you deploy to remote servers over SSH. It also does things like keeping old releases, syncing assets, and migrating the database. For a command as simple as bundle exec cap production deploy (yes, every environment was production to this app, sigh), there were a lot of moving parts to figure out. In the end I got it working by setting up a separate “production.rb” file for the cap deploy to use, specifically for Vagrant, which allows the box to deploy to itself.

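    # Vagrant-only stage file: the box deploys to itself.
    # 192.168.67.4 is the Vagrant webserver IP I set up in the Vagrantfile.
    role :app, %w{192.168.67.4}
    role :primary, %w{192.168.67.4}
    set :branch, 'develop'
    # Every environment is "production" as far as this app is concerned.
    set :rails_env, 'production'
    server '192.168.67.4', user: 'vagrant', roles: %w{app primary}
    # The private key lets the box SSH to itself during the deploy.
    set :ssh_options, { forward_agent: true, keys: ['/path/to/vagrant/ssh/key'] }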

The trick here is allowing the Capistrano deploy to SSH to itself, so make sure your Vagrant private key is specified to allow this.
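Because a missing or overly permissive key is an easy way for that self-SSH step to fail (OpenSSH refuses private keys that group or others can read), a small preflight check can catch it before the deploy starts. This is a minimal sketch, assuming a Unix-like guest. The `ssh_key_problems` helper is hypothetical and not part of Capistrano.

```ruby
# Return a list of human-readable problems with the private key at `path`.
# An empty list means the key exists, is readable, and is not group/world accessible.
def ssh_key_problems(path)
  return ["#{path} does not exist"] unless File.file?(path)

  problems = []
  problems << "#{path} is not readable by the current user" unless File.readable?(path)

  mode = File.stat(path).mode & 0o777
  # Any group or other permission bit is too open for an SSH private key.
  if (mode & 0o077) != 0
    problems << format("%s has mode %04o; expected 0600 or stricter", path, mode)
  end
  problems
end
```

One way to use it is to call it with the same path passed to `keys:` in the stage file and abort the deploy with the returned messages if the list is not empty.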

Deploying on AWS

To deploy on AWS, I needed to create an AMI, or image from which new servers could be duplicated in the future. I started with a fairly clean CentOS 7 AMI I had created a week or so earlier, and went from there. I used ansible-pull to check out the correct Git repository and branch for the newly created Ansible app code, then used ansible-playbook to work through the app deployment sequence on an actual AWS server. In the original app deploy code I brought down, there were some playbooks that could only be run on AWS (requiring data from the Ansible ec2_metadata_facts module), so this step also involved troubleshooting the pieces that did not run locally.

After several prototype servers, I determined that the AMI should contain the base packages needed to install Ruby and Passenger (with Nginx), as well as rbenv and Ruby itself installed into the correct paths. The deploy itself then installs any additional gems added to the Gemfile, runs bundle exec cap production deploy, and swaps new servers into the ELB (Elastic Load Balancer) on AWS once they are deemed “healthy.”

This troubleshooting process also required me to copy over the database(s) in use by the old account (turns out this is possible with the “Share” option for RDS snapshots, so that was blissfully easy), create a new Redis instance, copy all the S3 assets to a bucket in the new account, and create a CloudFront distribution to serve those assets, with the appropriate security groups to lock all these services down. Last, I updated the vaulted variables in Ansible to point to the new AMIs, RDS instances, Redis instance, S3 bucket, and CloudFront distribution. After verifying things still worked as they should, I saved the AMI for easily replicable future use.

Still to come

A lot of progress has been made on this app, but there’s more still to come. After thorough testing, we’ll need to switch over the DNS to the new ELB CNAME and run entirely from the new account. And there is pipeline work in the future too — whereas before this app was serving as its own “blue-green” deployment using a “bastion” server of sorts, we’ll now be deploying with AWS CodeDeploy to accomplish the same thing. I’ll be keeping the blog updated as we go. Until then, I can rest easy knowing this app isn’t quite the hot mess I started with.