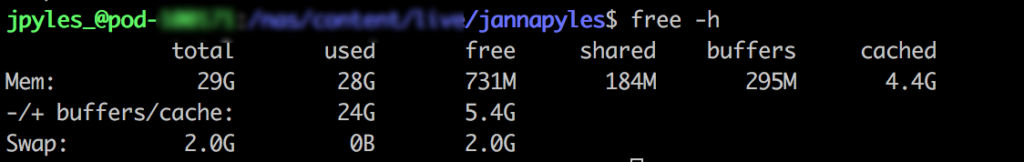

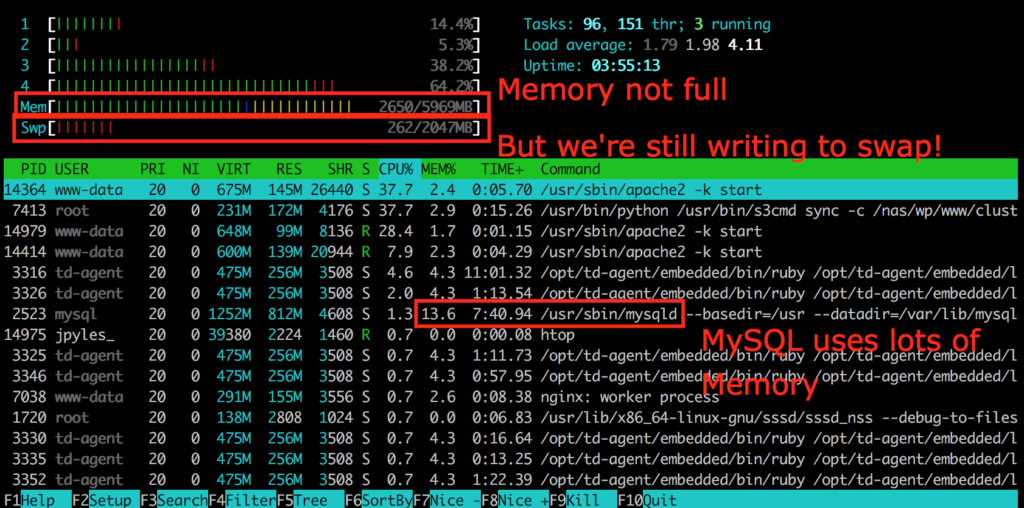

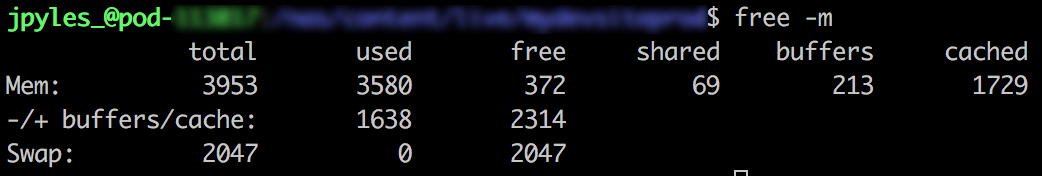

As you may know or have noticed, Memory utilization can be difficult to truly understand. While tools like free -m can certainly help, the numbers they report aren’t necessarily a true indication of health or unhealth. For example, seeing 90% Memory utilization doesn’t by itself mean it’s time to add more resources. The nature of Memory is to store temporary data for faster access the next time it is needed, so it tends to hold onto as much temporary data as it can until it needs to purge something to make more space.
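To make the "90% isn’t necessarily bad" point concrete, here is a minimal Python sketch that reads /proc/meminfo-style text and compares how much Memory is occupied with how much is genuinely unavailable. It assumes a Linux kernel that reports MemAvailable; the function names and the SAMPLE numbers are made up for illustration and are not output from a real server.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of values in kB."""
    values = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        fields = rest.split()
        if fields:
            values[key.strip()] = int(fields[0])
    return values


def memory_summary(meminfo):
    """Compare Memory that is merely occupied with Memory that is truly unavailable."""
    total = meminfo["MemTotal"]
    free = meminfo["MemFree"]
    available = meminfo["MemAvailable"]  # assumes the kernel reports this field
    return {
        # Everything that is not completely free, cache included.
        "occupied_pct": round(100 * (total - free) / total, 1),
        # What could not be reclaimed if an application asked for more.
        "unavailable_pct": round(100 * (total - available) / total, 1),
    }


# Hypothetical numbers, chosen only to illustrate the gap between the two figures.
SAMPLE = """\
MemTotal:        8000000 kB
MemFree:          800000 kB
MemAvailable:    5600000 kB
Buffers:          200000 kB
Cached:          4800000 kB
"""

if __name__ == "__main__":
    print(memory_summary(parse_meminfo(SAMPLE)))
```

With these sample numbers the server looks 90% full, yet only 30% of its Memory is actually out of reach, because most of what is occupied is cache the system can give back.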

About the InnoDB Buffer Pool

InnoDB (a storage engine for MySQL) has a dedicated pool of Memory, called the InnoDB Buffer Pool, that MySQL uses when working with InnoDB tables. Generally speaking, it’s safest to have an InnoDB Buffer Pool at least the same size as the database(s) on your server so that all tables can fit into the available Memory.

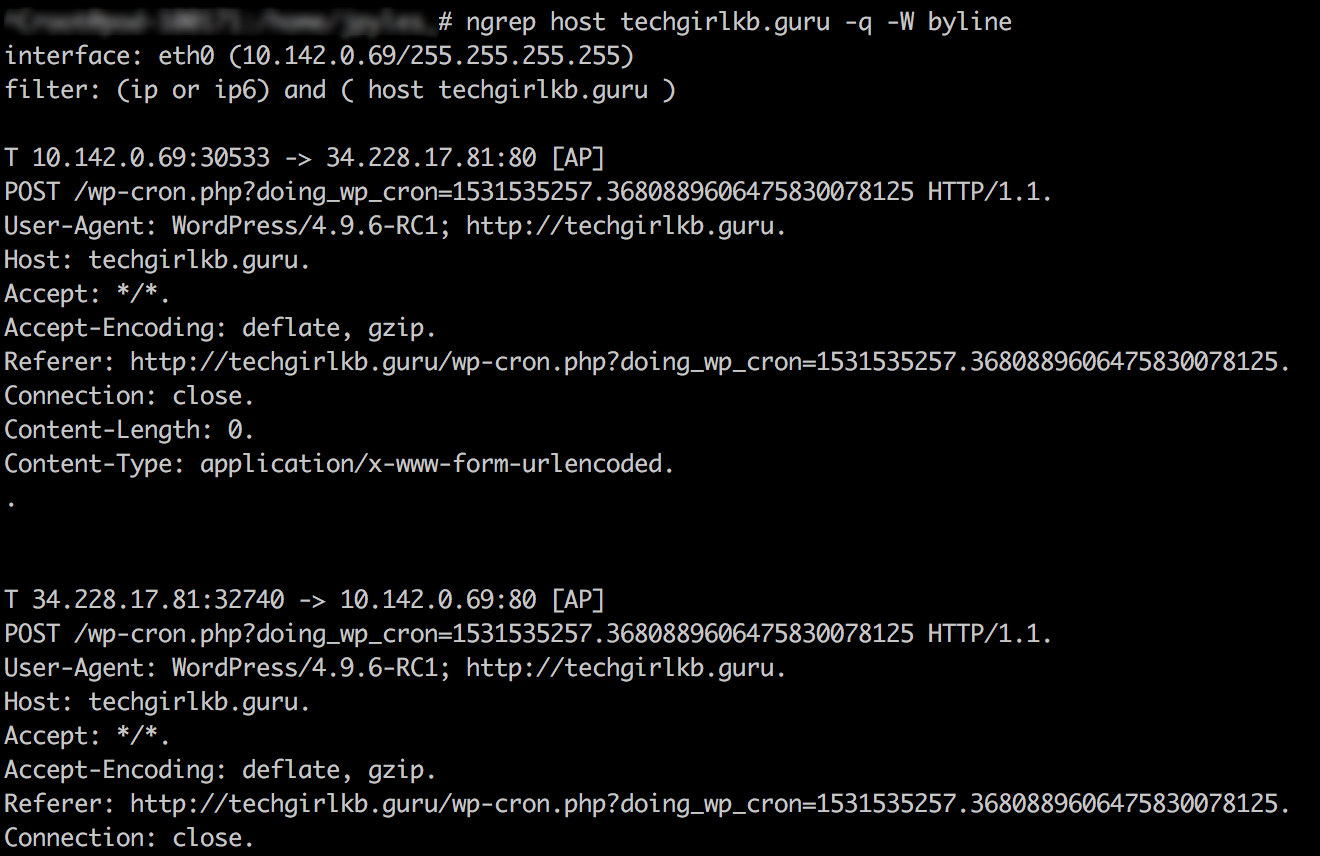

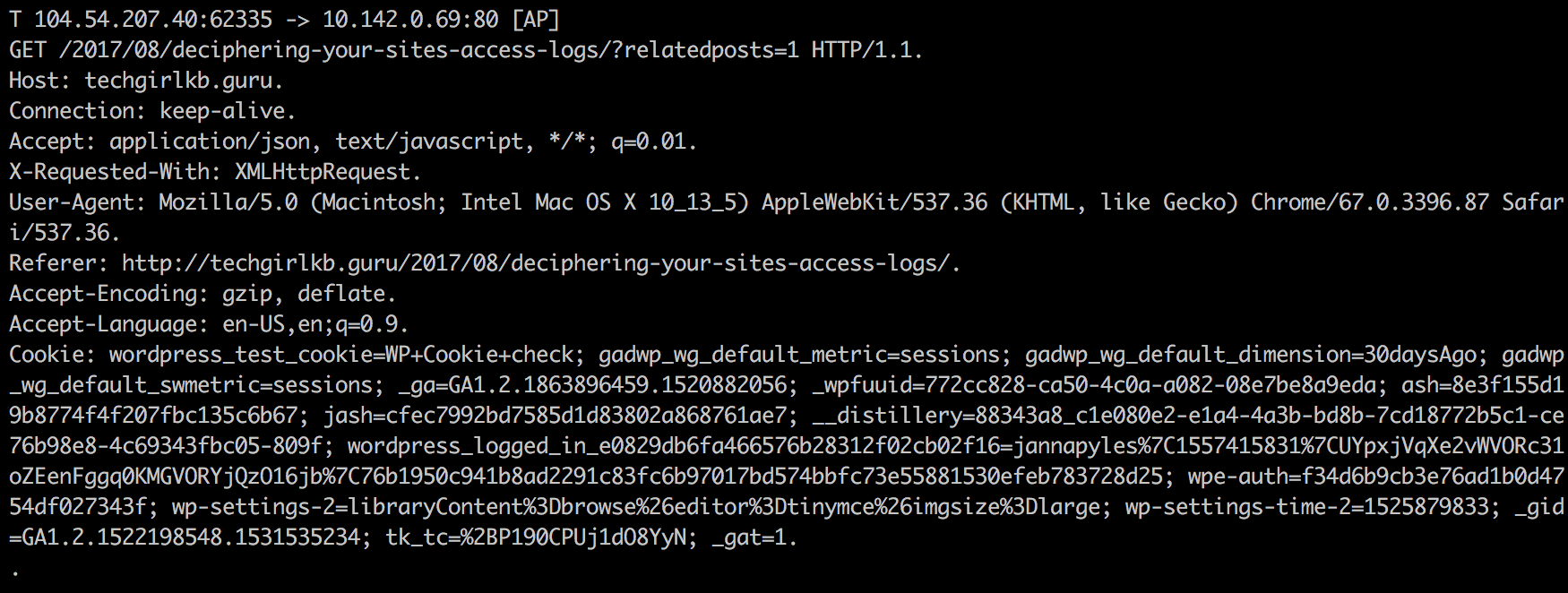

As queries access various database tables, the data they read is added to Memory in the InnoDB Buffer Pool for faster access by CPU processes. And if MySQL ends up needing more Memory than the server actually has available, the overflow gets written to swap instead. As I covered in my recent article on Memory and IOWait, that makes for increasingly painful performance issues.

The InnoDB Buffer Pool is clingy

Yep, that’s right. Like I mentioned above, Memory tends to hold onto the things it’s storing for faster access. That means it doesn’t purge items out of Memory until it actually needs the space for something else. When it does, it uses an algorithm called Least Recently Used (LRU) to identify the least-needed item in cache and purge it to make room for the next one. So unless your server has simply never had the need to store much in the InnoDB Buffer Pool, it will almost always show high utilization, and that’s not a bad thing! Not unless you are also seeing swap usage. That means something (in my experience, generally MySQL) is using more Memory than the server has available and is being forced to write to disk instead. And if that disk is a rotational disk (HDD) instead of an SSD, that can spiral out of control very easily.
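If LRU is new to you, the idea can be sketched in a few lines of Python. This is a simplified, textbook LRU cache and not InnoDB’s actual implementation (InnoDB uses a variation of LRU); the class name and the page names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """A tiny Least Recently Used cache: oldest-touched entry is evicted first."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # touching an item makes it "recent" again
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        # Nothing is purged until the cache is actually over capacity.
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # drop the least recently used entry

    def keys(self):
        return list(self.items)


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("page_a", "rows from table a")
    cache.put("page_b", "rows from table b")
    cache.get("page_a")                       # page_a is now the most recent
    cache.put("page_c", "rows from table c")  # cache is full, so page_b goes
    print(cache.keys())
```

Notice that the cache stays full the whole time. Nothing leaves until something new needs the room, which is exactly why utilization looks high.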

All this to say, the InnoDB Buffer Pool will hang onto stuff, and that’s because it’s doing its job: storing database tables for faster access the next time they are needed. So don’t take high utilization alone as a sign of outright unhealth! Be sure to factor swap usage into the equation as well.
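As a rough illustration of that rule of thumb, here is a small Python sketch that looks at swap first and Memory utilization second. The function name, the 64 MB swap tolerance, and the 90% figure are all assumptions made for the example, not recommended thresholds.

```python
def assess(memory_used_pct, swap_used_mb, swap_tolerance_mb=64):
    """Classify a server using swap as the main warning sign.

    swap_tolerance_mb is an arbitrary, hypothetical allowance for the small
    amount of swap an otherwise healthy server may show.
    """
    if swap_used_mb > swap_tolerance_mb:
        return "investigate: swap is in use"
    if memory_used_pct >= 90:
        return "ok: Memory is busy caching, but nothing is swapping"
    return "ok"


if __name__ == "__main__":
    print(assess(memory_used_pct=92, swap_used_mb=0))
    print(assess(memory_used_pct=92, swap_used_mb=900))
```

The first call comes back as ok despite 92% utilization, while the second is flagged purely because of the swap usage.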

Allocating Memory to the InnoDB Buffer Pool

InnoDB Buffer Pool size and settings are typically configured in your /etc/mysql/my.cnf file. Here you can set variables like:

innodb_buffer_pool_size = 256M

innodb_io_capacity = 3000

innodb_io_capacity_max = 5000

…And more! The MySQL documentation covers a whole host of settings you can configure for your InnoDB Buffer Pool. General guidelines for configuring the pool size: ensure it’s smaller than the total amount of Memory on your server, and ensure it’s the same size as or larger than the database(s) on your server. From there you can test your website while fine-tuning the settings to see which size is most effective for performance.
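Those two sizing guidelines are easy to sanity-check. The Python sketch below converts my.cnf-style sizes such as 256M into bytes and reports which guideline, if any, a given pool size breaks. The function names are hypothetical, only the K, M, and G suffixes are handled, and the sizes in the example are invented.

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_size(value):
    """Convert a size such as '256M' or '1G' into bytes (K/M/G suffixes only)."""
    value = value.strip().upper()
    if value and value[-1] in UNITS:
        return int(value[:-1]) * UNITS[value[-1]]
    return int(value)


def check_pool_size(pool, database, total_memory):
    """Return the list of sizing guidelines that the given pool size breaks."""
    pool_b = parse_size(pool)
    problems = []
    if pool_b >= parse_size(total_memory):
        problems.append("buffer pool is not smaller than total Memory")
    if pool_b < parse_size(database):
        problems.append("buffer pool is smaller than the database(s)")
    return problems


if __name__ == "__main__":
    # Invented sizes: a 256M pool, 1G of databases, 4G of Memory on the server.
    print(check_pool_size(pool="256M", database="1G", total_memory="4G"))
```

An empty list means both guidelines are met. It is only a starting point, since the rest of MySQL and the operating system need Memory too.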

Have any comments or questions? Experience to share regarding the InnoDB Buffer Pool? Let me know in the comments, or Contact Me.