If you’re using a performance-optimized server ecosystem, there’s a good chance that you’re using at least one proxy relationship. Whether the proxy sits on another server or firewall or simply passes requests from one web server to another within the same environment, a broken proxy can be difficult to troubleshoot.

Today we’ll look specifically at the proxy relationship between Nginx and Apache when both web servers are in use. Curious about the benefits of using Nginx, Apache, or both? Check out the Web Server Showdown.

What is a proxy?

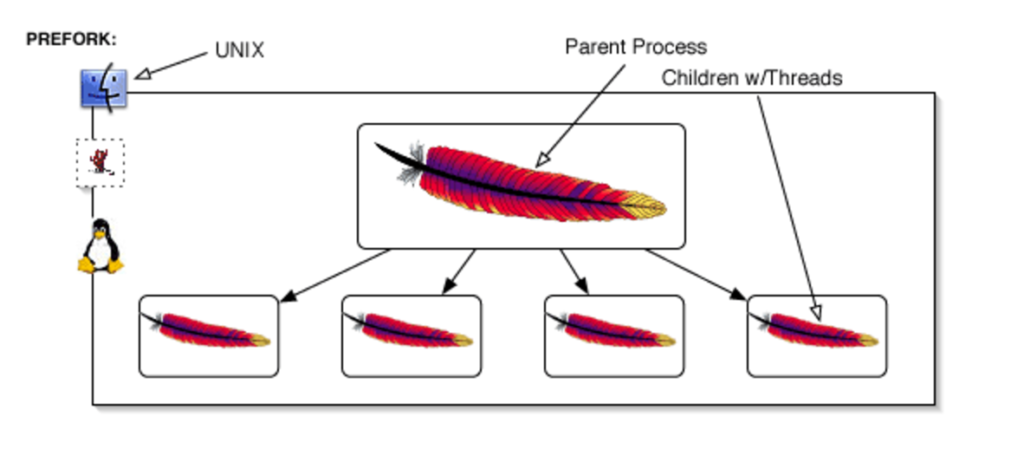

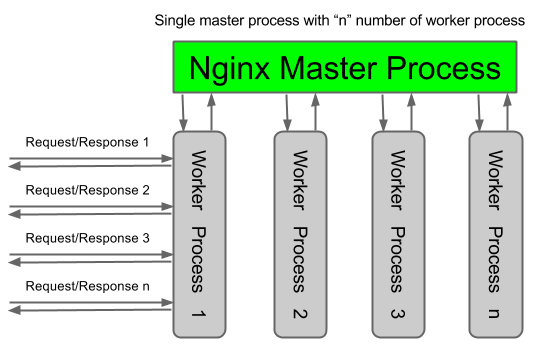

Before we dive in too far, let’s answer a basic question: what is a proxy? A proxy, sometimes referred to as an “application gateway,” is a web server that acts as an intermediary to another web server or service. In our example, Nginx functions as a reverse proxy, passing requests to your caching mechanism or to Apache. Apache processes the request and passes the response back to Nginx, which in turn passes it to the original requestor.

What is a broken proxy?

Now that we understand the proxy, we can answer the question: what is a broken proxy? A broken proxy is what happens when the intermediary service passes a request along but never gets a response back. In our example, Nginx passes the request to Apache, but something happens at the Apache level and the request is lost. Apache now has nothing to hand back to Nginx.

Nginx, however, is still responsible for telling the original requestor… something! It responds with a 502 (Bad Gateway) or, when the upstream took too long to answer, a 504 (Gateway Timeout) HTTP status code.
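To make the 502/504 distinction concrete, here is a toy reverse proxy in Python. It is a minimal sketch, not how Nginx is implemented: it assumes a single hypothetical upstream at 127.0.0.1:6789, handles only GET, and skips things a real proxy does, such as forwarding the Host header. Any reply from the upstream is relayed as-is; a refused or dropped connection becomes a 502, and an upstream that stays silent past the timeout becomes a 504.

```python
import http.client
import socket
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ReverseProxyHandler(BaseHTTPRequestHandler):
    """Toy reverse proxy: relays GET requests to one upstream server."""

    # Hypothetical upstream: Apache listening on 127.0.0.1:6789.
    upstream = ("127.0.0.1", 6789)
    upstream_timeout = 5  # seconds to wait before giving up (-> 504)

    def do_GET(self):
        host, port = self.upstream
        conn = http.client.HTTPConnection(host, port, timeout=self.upstream_timeout)
        try:
            conn.request("GET", self.path)
            upstream_response = conn.getresponse()
            body = upstream_response.read()
            status = upstream_response.status
        except socket.timeout:
            # Upstream never answered in time: nothing to relay.
            self.send_error(504, "Gateway Timeout")
            return
        except (OSError, http.client.HTTPException):
            # Connection refused/reset or a malformed reply: nothing to relay.
            self.send_error(502, "Bad Gateway")
            return
        finally:
            conn.close()
        # Any real answer, even a 500 from application code, is passed through.
        self.send_response(status)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Run the toy proxy on 127.0.0.1:port (blocks forever)."""
    ThreadingHTTPServer(("127.0.0.1", port), ReverseProxyHandler).serve_forever()
```

Calling `serve()` starts the proxy on port 8080; with nothing listening on port 6789, every request should come back as a 502.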

Troubleshooting broken proxies

A common problem with proxies is that they can be difficult to troubleshoot. How do you know which service did not respond to the request Nginx (the proxy server) sent? And how do you know why the service did not complete the request?

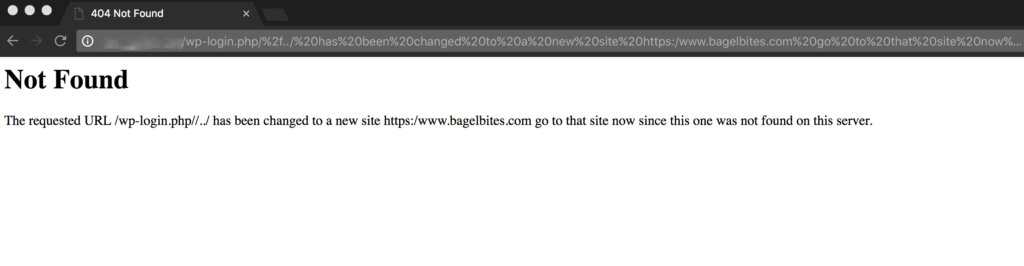

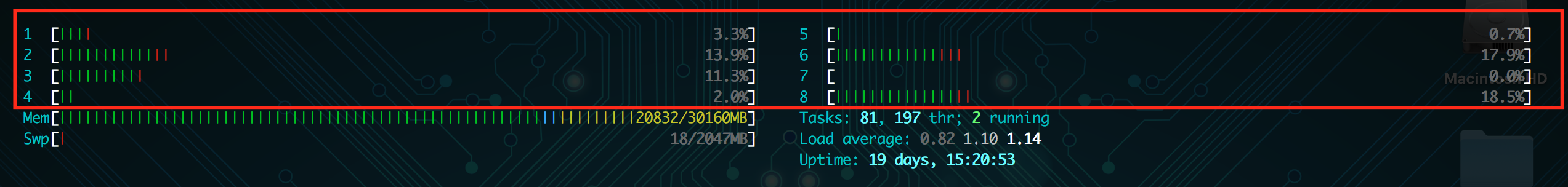

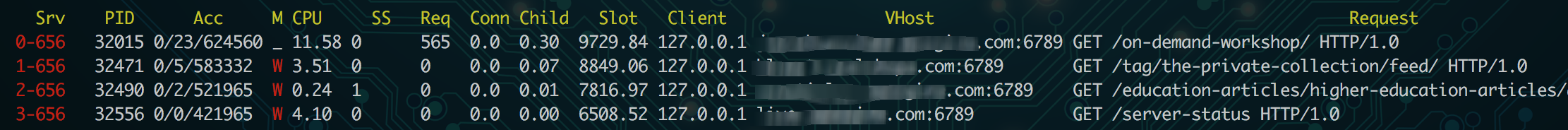

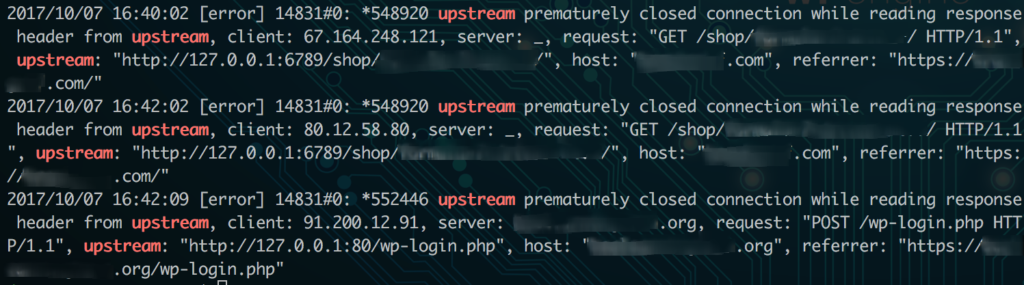

A good place to start is your logs. Your Nginx error log records when an upstream error occurred and may offer some context, such as the port the request was sent to. On many servers this log is found at /var/log/nginx/error.log.
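If your error log is long, a short script can tally which upstream ports are failing. This is a sketch that assumes Nginx’s default error-log format, where upstream errors carry a field like `upstream: "http://127.0.0.1:6789/"`; it ignores Unix-socket upstreams, and the log path in the usage line is an assumption.

```python
import re
from collections import Counter

# Matches the `upstream: "http://127.0.0.1:6789/..."` field that Nginx
# appends to upstream error lines and captures the port number.
UPSTREAM_RE = re.compile(r'upstream: "\w+://[^":/]+:(\d+)')


def upstream_ports(lines):
    """Count upstream errors per port in Nginx error-log lines."""
    counts = Counter()
    for line in lines:
        match = UPSTREAM_RE.search(line)
        if match:
            counts[int(match.group(1))] += 1
    return counts
```

For example, `upstream_ports(open("/var/log/nginx/error.log")).most_common()` lists the busiest failing ports first.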

The port in that upstream error is your first clue. In my example, I can look up which service is running on that port. If I know Apache or my caching mechanism listens there, I know that service is responsible for the error.
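Once you have a port, you can check whether anything is listening on it. The sketch below pairs a plain TCP connection test with a hypothetical `KNOWN_SERVICES` map that you would fill in from your own configuration (6789 for Apache matches this example; 6081 for a cache such as Varnish is only an illustration). It cannot discover the owning process by itself; on Linux, `ss -ltnp` shows that directly.

```python
import socket

# Hypothetical map of which service you configured on which local port.
# Fill this in from your own Nginx, Apache and cache configuration.
KNOWN_SERVICES = {6789: "Apache", 6081: "Varnish"}


def is_listening(port, host="127.0.0.1", timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def describe_port(port):
    """Summarize which configured service owns a port and whether it is up."""
    service = KNOWN_SERVICES.get(port, "unknown service")
    state = "listening" if is_listening(port) else "NOT listening"
    return f"port {port}: {service}, {state}"
```

A result like `port 6789: Apache, NOT listening` points straight at a stopped or restarting Apache.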

In the example pictured, port 6789 was my Apache service, so I know Apache did not fulfill the request. Now I can check my Apache error logs for more information. These are generally stored alongside the server’s other logs, for example /var/log/apache2/error.log. If you host multiple sites on the same server, each site’s errors may be logged to a separate file in that directory instead.
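With several per-site log files, it helps to search them all at once. This sketch assumes the /var/log/apache2 directory and a naming scheme where every error log has “error” in its file name; both are assumptions to adjust for your server. Search for something that ties an entry to the failed request, such as a time fragment like `10:00:` taken from the 502 in the Nginx log (the two servers format timestamps differently, so match on the clock time).

```python
import glob
import os


def search_error_logs(needle, log_dir="/var/log/apache2"):
    """Yield (file name, line) for every error-log line containing needle.

    Scans every file matching *error*.log, which covers the main error.log
    as well as per-site files such as example.com-error.log (a
    hypothetical naming scheme).
    """
    for path in sorted(glob.glob(os.path.join(log_dir, "*error*.log"))):
        with open(path, errors="replace") as handle:
            for line in handle:
                if needle in line:
                    yield os.path.basename(path), line.rstrip("\n")
```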

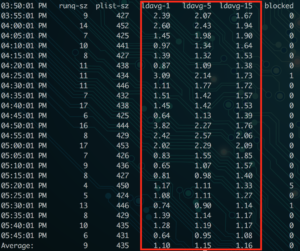

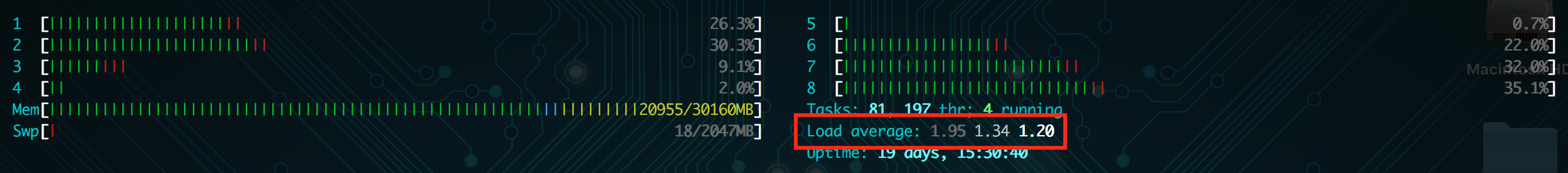

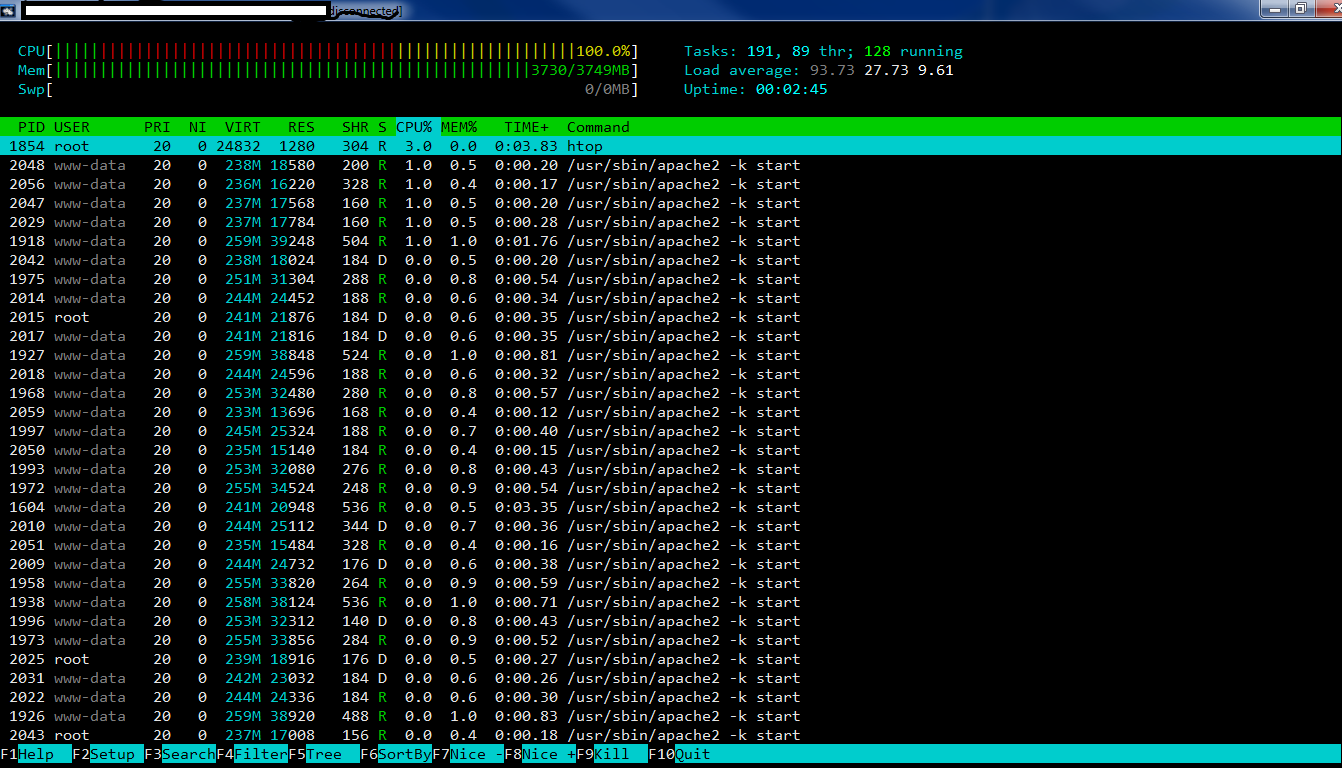

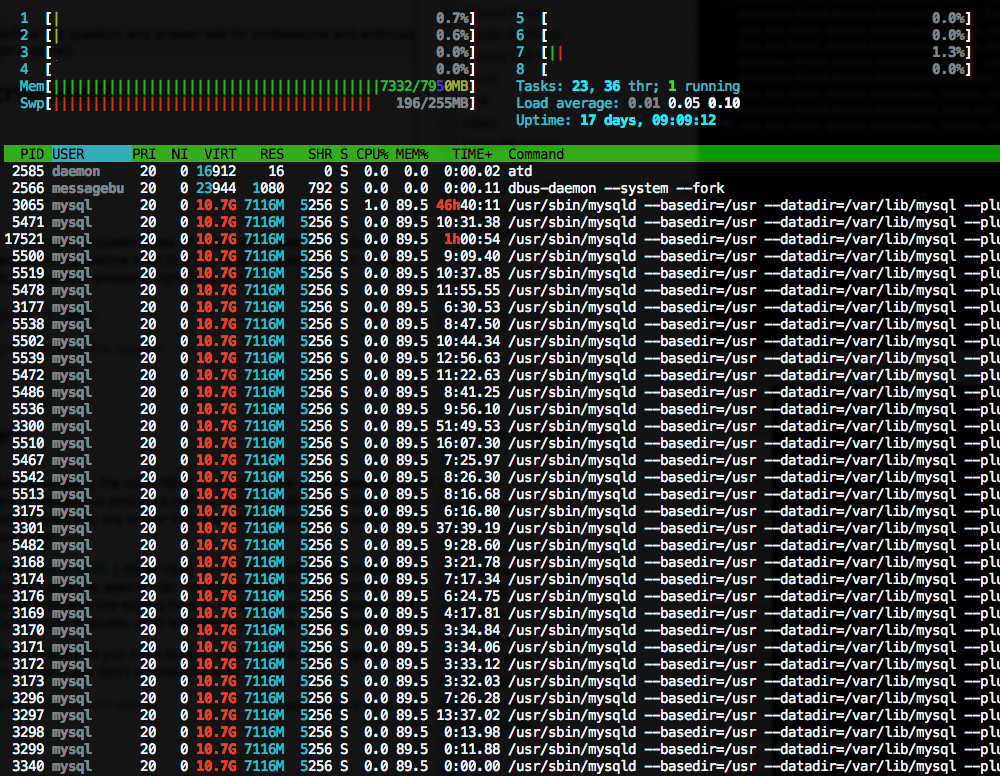

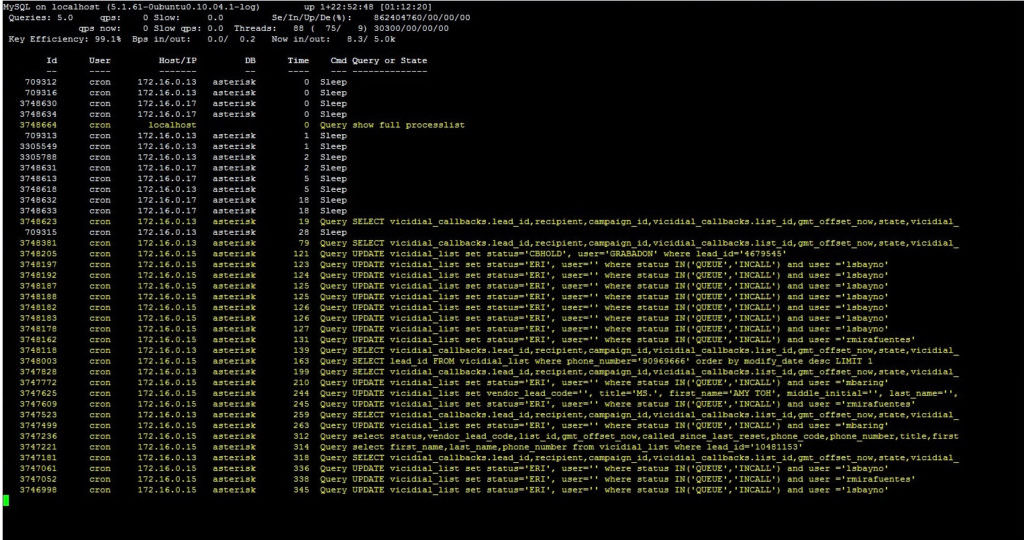

Some common reasons Apache might not complete a request:

- The request timed out (for example, PHP’s max_execution_time was reached)

- The request used too much memory and was killed

- A segmentation fault occurred

- The Apache service is stopped or in the middle of restarting

Your Apache error logs will often tell you whether one of the above caused the issue. If they don’t, check the firewall or security services on the server to see whether the requests were blocked for security reasons.
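To scan a log for the causes listed above, you can match each line against a few typical messages. The patterns below are assumptions based on common PHP and Apache 2.4 wording (PHP’s “Maximum execution time” and “Allowed memory size” fatals, Apache’s “Segmentation fault” child exits, and shutdown or restart notices); adjust them to what your own logs show. Note that a process killed by the kernel’s out-of-memory killer is usually recorded in the system log rather than Apache’s.

```python
import re

# Assumed substrings typical of each cause; tune them to your own logs.
CAUSE_PATTERNS = {
    "timeout": re.compile(r"Maximum execution time of \d+ seconds? exceeded"),
    "memory": re.compile(r"Allowed memory size of \d+ bytes exhausted"),
    "segfault": re.compile(r"Segmentation fault"),
    "restart/stop": re.compile(r"shutting down|restart", re.IGNORECASE),
}


def classify(line):
    """Return the first matching cause for an error-log line, or None."""
    for cause, pattern in CAUSE_PATTERNS.items():
        if pattern.search(line):
            return cause
    return None
```

Feeding every line of an error log through `classify` and counting the non-`None` results with `collections.Counter` gives a quick picture of which cause dominates.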

Caveats

Keep in mind: even if Apache hits an error (like a 500 caused by theme or plugin code), as long as it fully processes the request it simply passes that HTTP status code up to Nginx, which serves it to your users. So remember, a 502 error typically results only when Apache sends no response back to Nginx.
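One way to confirm this is to ask Apache directly, bypassing Nginx. The sketch below assumes Apache listens on the hypothetical port 6789 and uses `example.com` as a stand-in for your site’s hostname, sent as the `Host` header so Apache picks the right virtual host. A status code such as 500 means Apache did answer, so Nginx should have relayed it; `None` means Apache gave no usable response, the case that leads to a 502 or 504.

```python
import http.client


def direct_status(port=6789, path="/", host="127.0.0.1", site="example.com", timeout=10):
    """Request `path` straight from the upstream, bypassing the proxy.

    Returns the HTTP status code the upstream answered with, or None if
    it gave no usable response.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        # Send the public site name so Apache selects the right virtual host.
        conn.request("GET", path, headers={"Host": site})
        response = conn.getresponse()
        response.read()
        return response.status
    except (OSError, http.client.HTTPException):
        return None
    finally:
        conn.close()
```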

Also remember that broken proxies are not always within the same server environment. If you use a firewall or full-site CDN service, requests are proxied through those external servers as well. If you see a 502 error and can’t find the corresponding request in your access logs, check the logs on your external firewall or CDN next.
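When an outer layer is involved, the response headers can hint at which hop generated the error. Many CDNs and firewalls identify themselves in the `Server` header (Cloudflare, for example, sends `cloudflare`), while stock Nginx sends a value starting with `nginx`. The helper below is a sketch that fetches a URL and returns the status together with that header; treat the header as a clue rather than proof, since any layer can rewrite it.

```python
import http.client
from urllib.parse import urlsplit


def who_answered(url, timeout=10):
    """Return (status, Server header) for a GET request to `url`."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        response.read()
        # A 502 whose Server header names your CDN or firewall rather than
        # your own Nginx suggests the failure happened at that outer layer.
        return response.status, response.getheader("Server", "")
    finally:
        conn.close()
```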

Have you experienced issues with 502 errors on your server? What was the cause? Have any other solutions or recommendations to include? Let me know in the comments, or Contact Me.